Generative simulation for industries

Generative simulations for robotics, automotive, logistics, and beyond.

Simulation Meets Reality

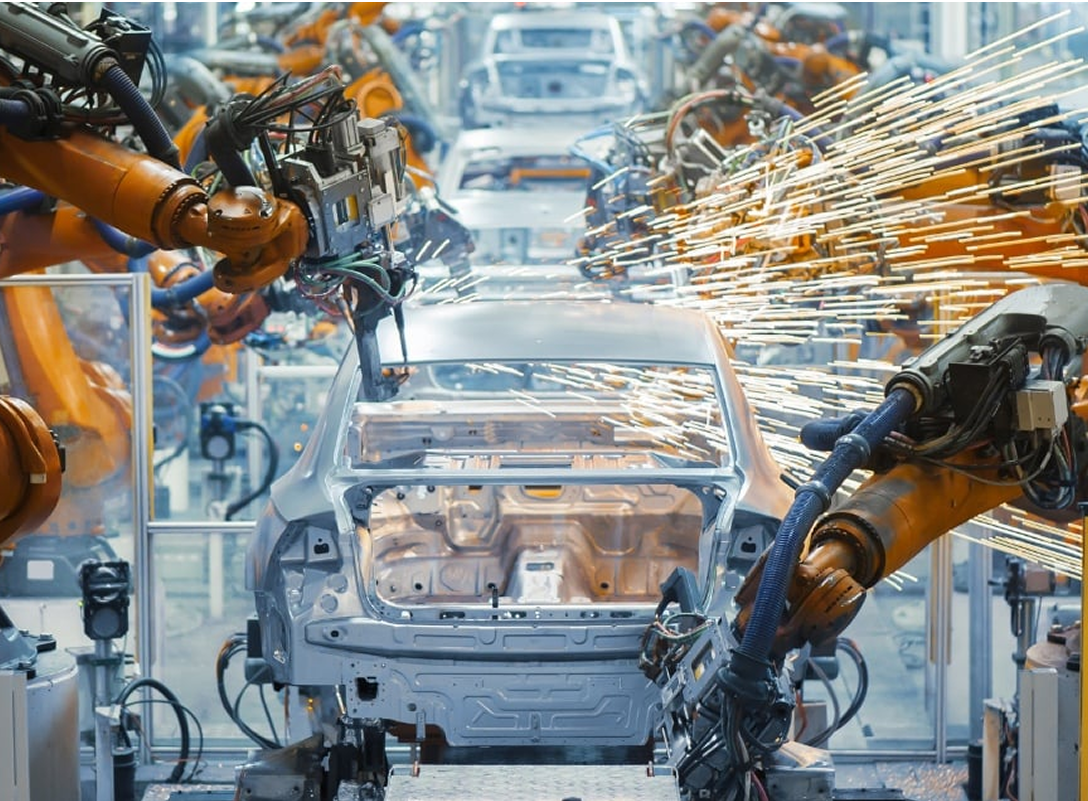

Industrial Automation

Virtual Factories. Real Automation

Robotic Arms (welding, assembly)

SCARA & Delta Robots (pick-and-place)

CNC-Integrated Bots

Mobile Manipulators

Validate assembly workflows

Train on precise, multi-step operations

Run HRI and fault-handling scenarios

Logistics & Warehousing

Train robots to move goods like clockwork

AMRs & AGVs

Sorting Robots

Pick-and-Place Vision Bots

Palletizers

Optimize fleet coordination

Test last-meter navigation

Run HRI and fault-handling scenarios

Defense & Aerospace

De-risking high-stakes autonomy

UGVs and UAVs

Recon Drones

Surveillance Crawlers

Tethered Inspection Units

Test in tactical or unstructured terrain

Validate mission logic

Train multi-agent recon and AI perception

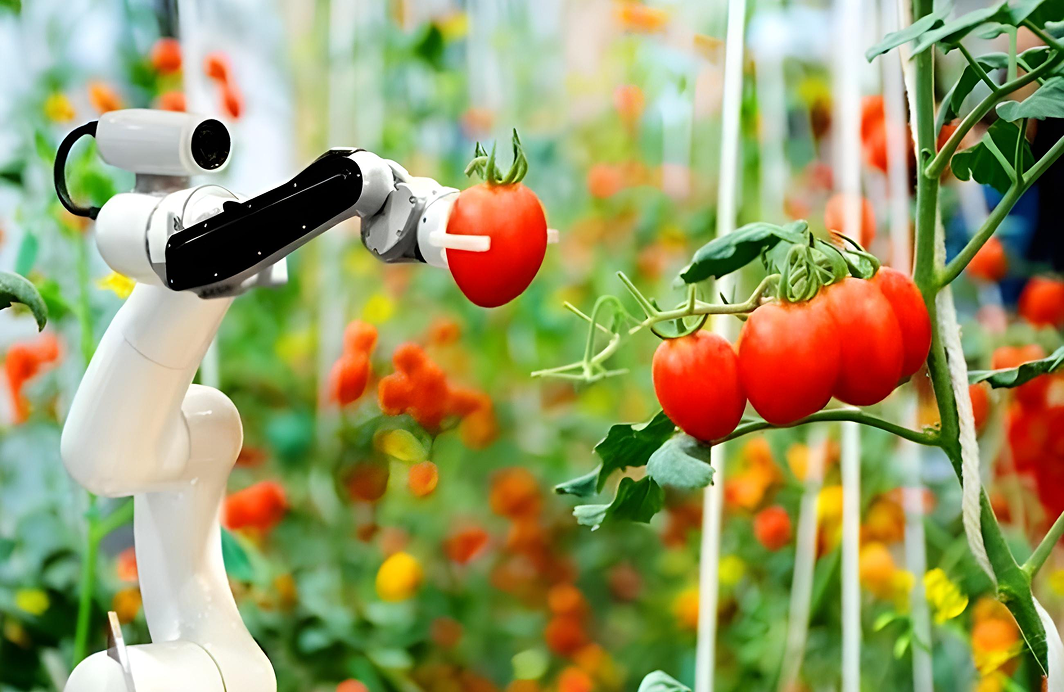

Agriculture Robotics

Smarter fields. Adaptive farming

Harvesting Robots

Autonomous Tractors

Drones for crop monitoring

Weeding/irrigation platforms

Simulate terrain and crop variability

Train plant health monitoring AI

Test precision-farming scenarios

Energy & Utilities

Robots that inspect, climb, and dive

Pipeline Crawlers

Substation Maintenance Bots

Wind Turbine Inspectors

Underwater ROVs

Wall-Climbing Robots

Model hazards (heat, wind, pressure)

Validate remote maintenance operations

Train vision and control in extreme environments

Accelerate Your Robotics & Digital Twin Transformation

Fast-track your robotics and digital twin transformation with scalable, physics-driven environments.

Challenges Faced in the Industry

Warehouses and fulfillment centers are under constant pressure to fulfill increasing demand with maximum efficiency and minimal error. However, operational complexity, dynamic environments, and labor variability create significant challenges.

Key challenges include:

- Inadequate training data for robots in dynamic, multi-agent settings

- Difficulty simulating edge cases such as collisions, human interaction, and visibility issues

- Safety concerns and costs of physical testing at scale

- Interoperability issues among fleets from different vendors

- Latency and failure risk in robot-to-robot or robot-to-server communication

AuraSim’s Solution

AuraSim enables warehouse robotics teams to design, simulate, and optimize every robotic subsystem, from autonomous forklifts to AMRs and picking arms, entirely in a virtual environment.

With AuraSim, you can:

- Generate diverse, realistic warehouse layouts using natural language

- Simulate multi-agent interactions across fleets of AMRs, forklifts, drones, and robotic arms

- Create physics-accurate, sensor-rich synthetic data for training perception models (RGB, depth, LiDAR, thermal)

- Inject network latency, jitter, or dropout to stress-test systems before deployment

- Benchmark performance under different edge/cloud compute configurations

Major Robotic Systems Simulated

System Coordination & Interoperability

Warehouse robots are coordinated through:

- Central orchestration systems (e.g., WES) for task allocation and routing

- Fleet management software that prevents congestion and optimizes task assignments

- P2P negotiation protocols to enable collision avoidance and local decision-making

- Standardized APIs (e.g., VDA 5050) to unify communication across vendors

AuraSim enables simulation of complex, coordinated workflows, e.g., a forklift loading a tote picked by an AMR, guided by a central control system while avoiding human workers.
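To make the idea of central task allocation concrete, here is a minimal sketch of a greedy "nearest free robot" assignment. It is a generic illustration, not AuraSim's or any WES vendor's API: the function name `assign_tasks`, the robot and task names, and the 2D coordinates are all hypothetical.

```python
import math


def assign_tasks(robots, tasks):
    """Greedily give each task to the nearest robot that is still free.

    robots, tasks: dict mapping a name to an (x, y) position in metres.
    Returns a dict mapping task name -> robot name.
    """
    free = dict(robots)  # copy so the caller's dict is not modified
    assignment = {}
    for task, task_pos in tasks.items():
        if not free:
            break  # more tasks than robots; the rest stay unassigned
        nearest = min(free, key=lambda name: math.dist(free[name], task_pos))
        assignment[task] = nearest
        del free[nearest]
    return assignment


# Hypothetical fleet and task positions, for illustration only.
robots = {"amr_1": (0.0, 0.0), "amr_2": (10.0, 0.0), "forklift_1": (5.0, 8.0)}
tasks = {"tote_A": (9.0, 1.0), "pallet_B": (4.0, 7.0)}
print(assign_tasks(robots, tasks))
```

A real orchestration system would also weigh robot capabilities, battery state, and congestion; the greedy rule is only the simplest baseline to compare against.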

Modeling Network Latency in AuraSim

AuraSim includes native support for simulating latency and network behavior. Use it to inject network failures, test edge/cloud strategies, and ensure your robots operate robustly under imperfect conditions.
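As a rough illustration of what latency, jitter, and dropout injection means, the sketch below models a lossy link in plain Python. It does not use AuraSim's API; `LinkProfile`, `simulate_link`, and all parameter values are assumptions made up for this example.

```python
import random
from dataclasses import dataclass


@dataclass
class LinkProfile:
    """Hypothetical link description; field names are illustrative only."""
    base_latency_ms: float  # fixed one-way delay
    jitter_ms: float        # maximum random delay added on top
    dropout_rate: float     # probability in [0, 1] that a message is lost


def simulate_link(send_times_ms, profile, seed=0):
    """Return (send_time, arrival_time) pairs; arrival is None when dropped."""
    rng = random.Random(seed)  # seeded so a run can be replayed exactly
    results = []
    for t in send_times_ms:
        if rng.random() < profile.dropout_rate:
            results.append((t, None))  # message lost in transit
            continue
        delay = profile.base_latency_ms + rng.uniform(0.0, profile.jitter_ms)
        results.append((t, t + delay))
    return results


# One message every 100 ms over a link with 20 ms latency, up to 15 ms jitter,
# and 10% message loss (all values chosen arbitrarily for the example).
profile = LinkProfile(base_latency_ms=20.0, jitter_ms=15.0, dropout_rate=0.1)
trace = simulate_link([i * 100.0 for i in range(1000)], profile, seed=42)
delivered = [(s, a) for s, a in trace if a is not None]
loss = 1 - len(delivered) / len(trace)
worst = max(a - s for s, a in delivered)
print(f"loss={loss:.1%}, worst-case delay={worst:.1f} ms")
```

Fixing the seed makes the same sequence of delays and drops repeatable, which is what lets a failing scenario be replayed while a fix is tested.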

Metrics-Driven Impact

AuraSim customers report:

- 60-80% reduction in time-to-deployment

- 30-50% drop in real-world testing costs

- 3×-10× higher coverage of edge cases

- 4× faster iteration on navigation, picking, and coordination policies

Use Cases

- Simulate robot-to-human handoffs and shelf restocking errors

- Train fleets to handle blackout zones or temporary reconfiguration

- Validate multi-robot strategies under varying traffic and load levels

- Benchmark performance with sensor permutations or new hardware SKUs

- Model network partitioning and recovery logic in warehouse-scale systems

Challenges Faced in the Industry

Modern manufacturing demands hyper-efficiency, precision, and reliability, especially in the face of labor shortages, supply chain volatility, and demand fluctuations. While robotics and automation are central to this transformation, traditional training and testing approaches are costly, slow, and rigid.

Key industry challenges include:

- Hard-to-reproduce failure cases on real production lines (e.g., robotic arm misalignment, sensor noise)

- Delays in integration between new robots and legacy PLC/SCADA systems

- High cost of physical prototyping for new robot workcells or layouts

- Need for deterministic, real-time control under variable latency and noise

- Complex multi-robot coordination involving conveyors, arms, mobile platforms, and vision systems

AuraSim’s Solution

AuraSim provides a generative simulation environment tailored for industrial robotics, enabling fast prototyping, safe validation, and scalable synthetic data generation.

With AuraSim, manufacturers can:

- Rapidly simulate robotic cells including arms, conveyors, grippers, sensors, and human operators

- Generate synthetic datasets with precise control over noise, lighting, reflection, and occlusion

- Model time-critical scenarios involving microsecond-level latency requirements

- Train and validate motion planning, object detection, and collision avoidance algorithms in parallel

- Run “what if” simulations across robot configurations, task queues, and production rates

AuraSim bridges the gap between CAD and reality, allowing you to test before you build, with physics-backed confidence.

Key Robotic Systems Simulated

Multi-Robot Coordination & Timing

Industrial automation often involves:

- Sequence-controlled processes, where task execution follows strict stepwise logic

- Sensor-actuator loops, requiring real-time reaction to changes (e.g., part present → grip → move)

- Multi-system handoffs, such as a robotic arm placing an item onto a mobile platform

- Tightly synchronized execution across PLCs, ROS nodes, and control policies
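The "part present → grip → move" loop above can be sketched as a small state machine. This is a generic illustration rather than PLC code or an AuraSim interface; the state names, the `step` function, and the three boolean sensor inputs are assumptions chosen for the example.

```python
from enum import Enum, auto


class State(Enum):
    WAIT_FOR_PART = auto()
    GRIP = auto()
    MOVE = auto()
    DONE = auto()


def step(state, part_present, grip_closed, at_target):
    """Advance one control cycle; stay in the current state until its sensor fires."""
    if state is State.WAIT_FOR_PART and part_present:
        return State.GRIP
    if state is State.GRIP and grip_closed:
        return State.MOVE
    if state is State.MOVE and at_target:
        return State.DONE
    return state


# Simulated sensor readings per cycle: (part_present, grip_closed, at_target).
readings = [
    (False, False, False),  # nothing on the fixture yet
    (True, False, False),   # part arrives
    (True, True, False),    # gripper reports closed
    (True, True, True),     # arm reports target reached
]
state = State.WAIT_FOR_PART
history = [state]
for reading in readings:
    state = step(state, *reading)
    history.append(state)
print([s.name for s in history])
```

Because each transition depends on exactly one sensor signal, a delayed or noisy reading simply holds the machine in its current state, which makes the effect of injected sensor faults easy to observe.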

AuraSim enables modeling of:

- Precise clock synchronization between systems

- PLC-ROS gateway simulation

- Dynamic cell reconfiguration logic in response to failure or bottlenecks

Deterministic Latency Modeling

In manufacturing, sub-millisecond decision timing is crucial. AuraSim lets you inject micro-latency, processing delay, and actuator lag to simulate the full stack realistically and robustly.
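One way to reason about injected delay is as a latency budget: add up the delay of each stage and compare the total with the control-cycle deadline. The sketch below shows that arithmetic; the stage names, the microsecond values, and the 1 ms deadline are hypothetical numbers, not measurements or AuraSim defaults.

```python
def latency_budget(stages_us, deadline_us):
    """Return (total delay, remaining slack) in microseconds.

    A negative slack means the loop misses its deadline.
    """
    total = sum(stages_us.values())
    return total, deadline_us - total


# Hypothetical per-stage delays for one sense-decide-act cycle.
stages = {
    "sensor_read": 120,
    "network": 80,
    "processing": 450,
    "actuator_lag": 300,
}
total, slack = latency_budget(stages, deadline_us=1000)
print(f"nominal: total={total} us, slack={slack} us")

# Inject 100 us of extra network delay and check the budget again.
injected = dict(stages, network=stages["network"] + 100)
total_injected, slack_injected = latency_budget(injected, deadline_us=1000)
print(f"injected: total={total_injected} us, slack={slack_injected} us")
```

In this example the nominal cycle fits with 50 µs to spare, while the injected delay pushes it 50 µs past the deadline, the kind of margin a latency sweep is meant to expose.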

Metrics-Driven Impact

AuraSim has empowered industrial robotics teams to:

- Cut simulation-to-deployment cycles by 60%

- Reduce physical testbed usage by over 40%

- Boost first-pass success rates of vision+gripper systems by 3-5×

- Identify latency-sensitive bottlenecks before reaching the factory floor

Use Cases

- Grasp detection benchmarking across surface textures, lighting, and product variants

- Sim2Real validation of robotic welding torch trajectories with varying metal profiles

- Assembly line tuning for throughput and buffer timing using AI planners

- Safety testing for human-in-the-loop systems using collision simulation and override scenarios

- Multi-arm coordination, e.g., dual-arm assembly and cooperative lifting

Challenges Faced in the Industry

Aerospace and defense robotics operate in mission-critical, high-stakes environments where failure is not an option. From autonomous drones and bomb disposal units to satellite servicing robots, these systems must be trained and validated for edge cases that are too dangerous, rare, or expensive to replicate physically.

Key challenges include:

- Extremely high safety, robustness, and certification requirements

- Sparse real-world data for edge-case simulation (e.g., GPS jamming, visual occlusion, adversarial environments)

- Need for multi-modal sensing: radar, thermal, infrared, LiDAR, RF

- Difficult-to-reproduce terrain and flight conditions

- Latency sensitivity for real-time control in remotely piloted or autonomous missions

- High cost and risk of physical testing (e.g. UAV failure during a test flight)

AuraSim’s Solution

AuraSim provides a defense-grade simulation platform for building, training, and testing aerospace and military-grade robotic systems with adaptive fidelity and sensor realism.

With AuraSim, you can:

- Generate complex outdoor, aerial, underwater, or battlefield environments via text prompts or geo-referenced layouts

- Simulate multi-agent air and ground robotics (e.g., UAV swarms, UGV platoons, underwater robots)

- Integrate multi-sensor pipelines (e.g., RGB, thermal, SAR, RF, LiDAR) for fusion-based AI

- Model adverse weather, lighting, terrain, and interference with controllable parameters

- Evaluate systems under communication denial or jamming conditions

- Inject mission-based constraints for swarm autonomy, precision targeting, or search-and-rescue

AuraSim brings military realism, test repeatability, and AI readiness to aerospace robotics.

Robotic Systems Simulated

Multi-Robot Coordination & Timing

AuraSim enables modeling of air-ground-sea-space robotic integration, including:

- Swarm formation logic and dynamic target reassignment

- Multi-agent path planning with deconfliction zones and prioritization rules

- Satellite-ground relays and latency simulation across links

- Cross-domain mission simulation (e.g., UAV identifies object → UGV investigates)

All of this is coordinated using ROS-based middleware, military-grade protocol emulation (MQTT, DDS), and adaptive path planners.

Network & Latency Simulation

AuraSim allows fine-grained modeling of:

- UAV command latency under satellite or line-of-sight conditions (200-600 ms)

- Ground control handoffs across relay nodes

- Degraded bandwidth and jamming scenarios

- Sensor data sync in multi-sensor fusion pipelines (thermal + LiDAR + radar)

- Satellite link dropouts, spoofing simulation, or GPS denial
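To put the 200-600 ms command latency range in perspective, a quick calculation shows how far a vehicle travels before a command takes effect. The 15 m/s cruise speed is an assumed value for illustration, and `distance_during_latency_m` is a hypothetical helper, not part of any AuraSim API.

```python
def distance_during_latency_m(speed_m_s, latency_ms):
    """Distance covered (metres) while a command is still in flight."""
    return speed_m_s * latency_ms / 1000.0


cruise_speed_m_s = 15.0  # assumed UAV speed, for illustration only
for latency_ms in (200, 600):
    blind_m = distance_during_latency_m(cruise_speed_m_s, latency_ms)
    print(f"{latency_ms} ms latency -> {blind_m:.1f} m travelled before the command applies")
```

At this assumed speed the vehicle covers 3 m at 200 ms and 9 m at 600 ms, which is why obstacle avoidance is typically handled onboard rather than over the command link.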

Metrics-Driven Impact

AuraSim enables aerospace and defense teams to:

- Reduce physical test costs by up to 70%

- Simulate >95% of mission-critical edge cases in software

- Enable certification-grade test logs using deterministic replay

- Achieve faster AI model hardening for safety-critical vision and control modules

Use Cases

- UAV swarm navigation under GPS-denied conditions

- Target detection in thermal + RGB fusion under smoke or fog

- Sim-to-real transfer for space docking sequence planning

- Search-and-rescue drone training with simulated terrain, collapsed structures, and visual occlusion

- Battlefield robotics coordination with low bandwidth and disrupted comms

- Autonomy fallback validation under multi-node failure

Challenges Faced in the Industry

Agriculture and forestry robotics must operate in unstructured, open, and ever-changing environments, from fruit orchards and rice paddies to dense forests and mountainous terrain. These systems face weather, terrain irregularities, and biological variability that make physical testing and AI training highly unpredictable and expensive.

Key industry challenges include:

- Extreme environmental variability (lighting, seasons, soil, moisture, wind)

- Scarcity of annotated datasets for crops, weeds, diseases, and growth stages

- Unstructured terrain navigation, including mud, slopes, foliage, and obstacles

- Delicate interaction requirements, such as fruit picking or plant trimming

- Low-connectivity areas, requiring on-device inference and autonomy

- Multi-robot coordination across wide, dynamic fields and forests

AuraSim’s Solution

AuraSim empowers agri-tech companies to simulate diverse natural environments, crop cycles, and terrain, generating synthetic data and testing robotic systems across edge cases too risky or seasonal to capture physically.

With AuraSim, agricultural and forestry robotics teams can:

- Generate detailed 3D scenes of fields, forests, orchards, and plantations with soil, plants, pests, and growth variability

- Simulate full seasonal cycles: planting → growth → harvest

- Model terrain dynamics (e.g. slopes, mud, waterlogging, erosion)

- Train perception systems on synthetic crop, weed, and disease data under variable lighting, occlusion, and growth stages

- Simulate robotic grasping, spraying, pruning, and harvesting

- Model fleet coordination, mission planning, and route optimization

AuraSim enables safe, scalable, and season-agnostic training and validation for field-ready robotics.

Robotic Systems Simulated

Multi-Agent Coordination & Terrain-Aware Autonomy

AuraSim supports simulation of:

- Dynamic terrain-aware navigation, including slippage, wheel sink, and toppling risks

- Swarm coordination for field robots: task allocation, failover, and real-time status syncing

- Crop-sensitive control: adjusting actions by crop type, fragility, and ripeness

- Shared environment awareness between land and aerial robots

Examples include:

- A drone scans crop health, sending tasks to a sprayer bot

- A picking robot navigates canopy clutter based on seasonal foliage occlusion

- Fleet-based harvesting with shared paths and load balancing

Connectivity & Real-Time Constraints

Rural environments often lack high-bandwidth, low-latency infrastructure.

AuraSim allows simulation of:

- Offline-first autonomy, including preplanned mission execution and anomaly escalation

- Edge vs. cloud compute strategies under poor or intermittent connectivity

- Delay-tolerant coordination, e.g., between drones and tractors using buffer synchronization

- Sensor fusion in varying ambient conditions (fog, glare, dust)
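Delay-tolerant coordination usually comes down to store-and-forward: a robot queues messages while the link is down and delivers them in order once it returns. The sketch below shows that pattern in plain Python; the `StoreAndForward` class and the message strings are hypothetical and do not represent an AuraSim interface.

```python
from collections import deque


class StoreAndForward:
    """Queue messages while offline and deliver them in order when the link is up."""

    def __init__(self):
        self.pending = deque()  # messages waiting for connectivity
        self.delivered = []     # messages that reached the peer, in order

    def send(self, message):
        self.pending.append(message)

    def flush(self, link_up):
        """Try to deliver everything queued; return how many messages were sent."""
        sent = 0
        while link_up and self.pending:
            self.delivered.append(self.pending.popleft())
            sent += 1
        return sent


# A drone records three findings while out of range of the tractor.
drone = StoreAndForward()
for msg in ("row 12: weeds", "row 14: dry soil", "row 15: pest damage"):
    drone.send(msg)

sent_offline = drone.flush(link_up=False)  # link down, so nothing is delivered
sent_online = drone.flush(link_up=True)    # link restored, backlog is delivered
print(sent_offline, sent_online, drone.delivered)
```

Keeping the queue ordered means the receiving robot sees events in the sequence they occurred, even if they arrive minutes late.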

Metrics-Driven Impact

Agri-tech teams using AuraSim report:

- 5× increase in perception model generalizability across crop types and growth stages

- 60-80% reduction in field testing cycles

- Training data cost savings of up to 70%

- More robust Sim2Real performance, even in adverse weather and terrain

Use Cases

- Ripeness classification and grasp planning across lighting, angle, and occlusion conditions

- Path planning for autonomous tractors under muddy or eroded terrain

- Weed vs. crop segmentation in cluttered fields with seasonal variation

- Multi-robot spraying coordination, modeled for wind, overlap, and dosage

- Drone-to-ground communication testing with intermittent sync and retry logic

- Training AI to detect plant disease or pests across synthetic crop datasets

Challenges Faced in the Industry

Space robotics must operate in ultra-harsh, remote, and resource-constrained environments, often with no room for failure and limited or delayed ground control. From planetary rovers and robotic arms on the ISS to future in-orbit assembly bots and lunar landers, the cost and risk of physical testing are prohibitive.

Key challenges include:

- Lack of real-world test environments that accurately replicate microgravity, vacuum, and extraterrestrial terrains

- Severe communication latency (up to about 22 minutes one way to Mars)

- Complex multi-sensor processing (stereo cameras, LiDAR, force sensors, IMUs)

- Unstructured terrain navigation with dust, rock, slope, and lighting variability

- Need for autonomous decision-making under delayed or intermittent contact

- Ultra-high validation standards for mission assurance and space agency compliance

AuraSim’s Solution

AuraSim enables space robotics teams to simulate planetary, orbital, and deep-space missions with high physical and visual fidelity, supporting full-stack validation from perception to control.

With AuraSim, you can:

- Simulate low-gravity and vacuum environments with realistic physics

- Generate planetary terrains (e.g., Moon, Mars, asteroids) with controllable features like dust, rock density, and elevation maps

- Model robotic arms, rovers, hoppers, and landers with fine-grained kinematic fidelity

- Integrate multi-sensor inputs (e.g., stereo vision, LiDAR, radar, tactile)

- Inject command latency, sensor noise, and actuator lag to test autonomy-stack robustness

- Support Sim2Real workflows for perception, SLAM, motion planning, and manipulation

AuraSim brings the unreachable within reach, enabling “mission rehearsal” at software speed.

Key Systems Simulated

Inter-Robot & Ground Coordination

In space, coordination must account for:

- Intermittent uplinks and delay-tolerant communication protocols

- Autonomous decision-making without human-in-the-loop fallback

- Synchronized operation among multiple robots (e.g., lander, deployer, rover)

- Command sequencing and safety interlocks

Latency & Environmental Modeling

AuraSim supports:

- Simulated Earth-Moon (~1.3 s) and Earth-Mars (~3-22 min one way) latency profiles

- Solar panel shading, eclipse simulation, and thermal fluctuation modeling

- Force-feedback delay in teleoperation sequences

- Realistic dust occlusion, rock collision, and wheel slip modeling

- Orbital mechanics simulation for satellite rendezvous or debris tracking
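The Earth-Moon and Earth-Mars latency figures follow directly from the speed of light. The short calculation below reproduces them from approximate, commonly cited distances (mean Earth-Moon distance, and Earth-Mars at closest approach and at maximum separation); the distances are rounded reference values and `one_way_delay_s` is a hypothetical helper, not an AuraSim function.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # exact by definition of the metre


def one_way_delay_s(distance_km):
    """One-way signal travel time in seconds over the given distance."""
    return distance_km / SPEED_OF_LIGHT_KM_S


# Approximate distances in kilometres (rounded reference values).
MOON_MEAN_KM = 384_400
MARS_NEAREST_KM = 54_600_000
MARS_FARTHEST_KM = 401_000_000

moon_s = one_way_delay_s(MOON_MEAN_KM)
mars_min_minutes = one_way_delay_s(MARS_NEAREST_KM) / 60
mars_max_minutes = one_way_delay_s(MARS_FARTHEST_KM) / 60

print(f"Earth-Moon: {moon_s:.2f} s one way")
print(f"Earth-Mars: {mars_min_minutes:.1f} to {mars_max_minutes:.1f} min one way")
```

These are propagation delays only; relay hops, scheduling windows, and processing time add to them, and a round trip doubles the figure, so a teleoperated rover on Mars cannot be driven in a closed loop from Earth.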

Metrics-Driven Impact

With AuraSim, space robotics teams can:

- Reduce mission risk by simulating 90%+ of failure modes

- Conduct continuous training for autonomous stacks, even during transit

- Support certification-grade validation for space agency compliance

- Eliminate >70% of physical test cycles, saving time, cost, and launch mass

Case Studies & Resources

Trusted by the world’s top robotics teams, AuraSim delivers accurate generative physics simulation and synthetic data at unmatched scale and speed, powering breakthroughs in robotics, autonomy, and intelligent systems.

Talk to an Industry Specialist
