For decades, the dream of building truly intelligent, general-purpose robots has been bottlenecked by a fundamental problem: data. How can we teach a robot to navigate the near-infinite complexity of the real world? The traditional answer has been simulation. But today’s simulators, built on graphics engines, are fundamentally limited.

The core challenge is that these simulators are not reality; they are hand-crafted approximations. Creating high-fidelity 3D assets and environments is an immensely costly and labor-intensive process, requiring artists and engineers to manually build every object, texture, and space. This creates a scalability problem: it is simply not feasible to manually create the sheer diversity of scenarios a robot will encounter in the wild.

Furthermore, these environments suffer from a persistent "reality gap." The physics are based on parameterized equations that approximate, but never perfectly replicate, real-world dynamics. The way light reflects, how objects deform, and how sensors perceive the world are all governed by human-written rules. These subtle but significant differences mean that skills learned in the pristine, predictable world of a traditional simulator often fail when transferred to a physical robot in the messy, unpredictable real world.

At AuraML, we believe it's time for a new paradigm. Instead of manually building a simulation of the world, what if we could learn one?

Today, we are thrilled to announce Project Maya, our core initiative to build a generative multimodal world model. Named after the powerful concept in Hindu philosophy that describes the physical world as a beautiful illusion, Project Maya aims to learn and generate the very fabric of reality itself. It will be a foundation model capable of generating endless, interactive, and physically plausible 3D worlds and multi-sensor data on demand. This isn't just a better simulator; it's a stepping stone to the next generation of robotics and physical AI.

Why Now? The Generative AI Inflection Point

This ambition, once the stuff of science fiction, is now within reach thanks to a powerful convergence in AI research.

- Massive-Scale Models: Recent breakthroughs have shown that, with enough scale, models can learn complex, emergent behaviors from data. Foundation models with billions of parameters have demonstrated that they can generate controllable worlds from video alone, without explicit action labels.

- Advanced Architectures: The rapid evolution from early generative models to state-of-the-art latent diffusion models gives us the tools to generate high-fidelity, stable, and controllable environments.

- Vast Data Streams: The world is awash in video and sensor data. Pioneering research labs are demonstrating how to create real-time interactive experiences from real-world footage, proving that the raw material needed to learn the patterns of reality exists at massive scale.

These breakthroughs have paved the way. But to build a simulator for robotics, we need to go further.

The Maya Architecture: A Glimpse Inside

Maya is designed from the ground up for the unique challenges of robotics. It moves beyond the video-in, video-out paradigm of its predecessors to embrace the full sensory experience of a physical agent.

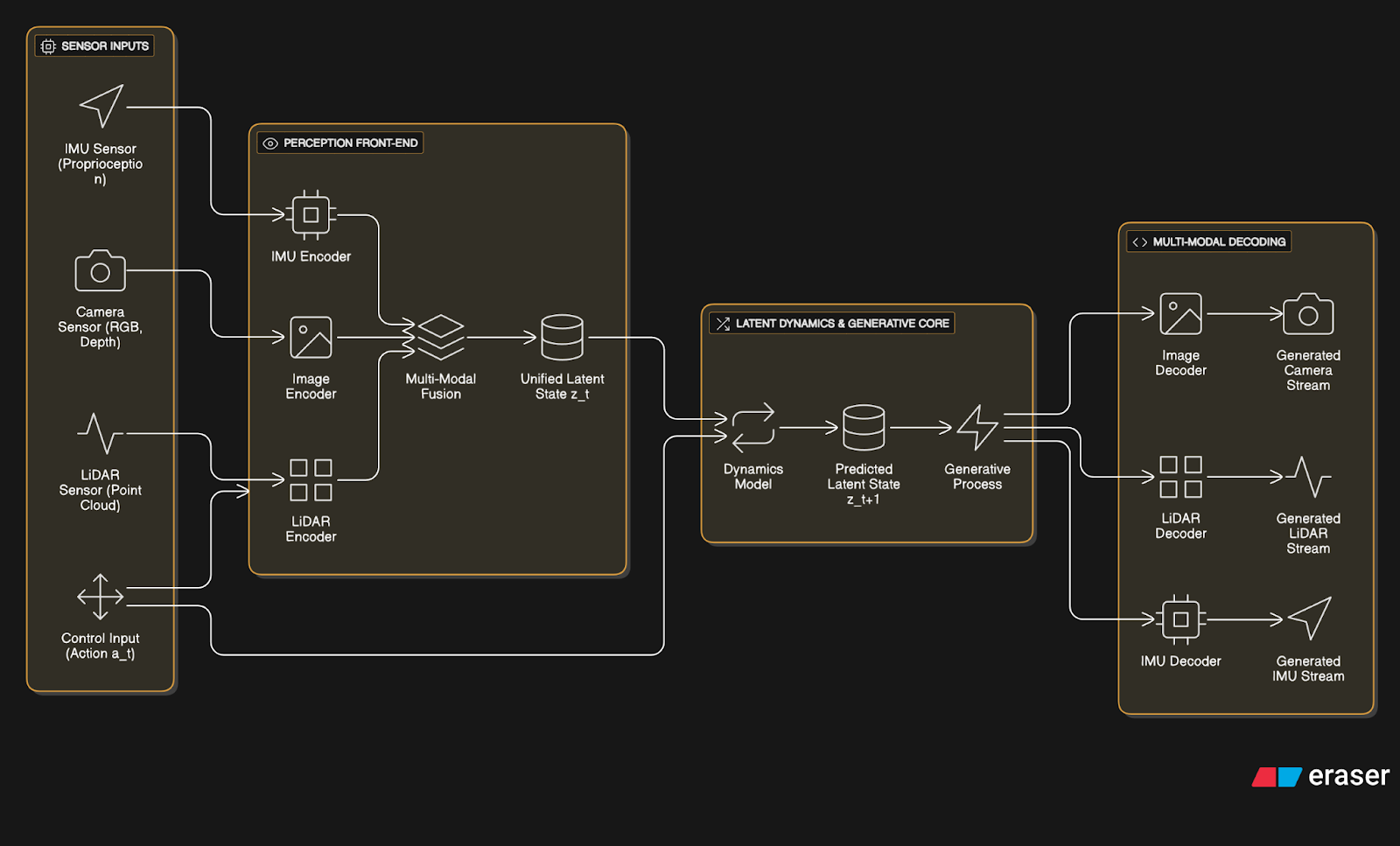

You can think of its architecture in three parts:

- The Perception Front End (The Senses): A robot perceives the world through a suite of different sensors. Maya will be truly multimodal, ingesting and fusing data from cameras (rich color and texture), LiDAR (precise 3D geometry), depth sensors, and the IMU (the robot's own sense of motion). Using advanced fusion techniques, it will combine these disparate streams into a single, holistic understanding of the world at any given moment.

- The Predictive Core (The Engine of Reality): This is the heart of Maya. It will be a large-scale, action-conditioned diffusion transformer. In simple terms, it takes the current state of the world and a specific control command from the robot (not a discrete keyboard press, but a continuous command such as "x_vel: 0.5 m/s" published by a ROS2 node) and predicts the next few seconds of the future. In doing so, it learns the cause and effect of the physical world.

- The Simulation Back End (The Dream Weaver): The model then renders this predicted future not just as video, but as a complete multimodal sensor stream: the synthetic camera frames, LiDAR point clouds, and depth maps that the robot would have perceived in the real world. This high-fidelity synthetic data becomes the training ground for our AI agents.
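To make the data flow between these three parts concrete, here is a minimal Python sketch of a single action-conditioned rollout. Every name in it is a hypothetical placeholder rather than Maya's actual interface: the `Action` fields, `fuse`, `rollout`, `toy_core`, and `toy_decode` are illustrative, sensor embeddings are assumed to be pre-encoded plain lists of equal length, and the toy core simply shifts the latent in proportion to the commanded velocity where the real system would run a diffusion transformer.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Latent = List[float]  # toy stand-in for a learned latent vector


@dataclass
class Action:
    """Continuous control command (hypothetical schema), e.g. taken from a
    velocity command published by a ROS2 node."""
    x_vel: float           # forward velocity in m/s
    yaw_rate: float = 0.0  # angular velocity in rad/s (unused by the toy core)


def fuse(encoded: Dict[str, Latent]) -> Latent:
    """Perception front end (toy): element-wise mean of per-sensor embeddings.
    Assumes every sensor was already encoded to a vector of the same length."""
    vectors = list(encoded.values())
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


Core = Callable[[List[Latent], Action], Latent]
Decoder = Callable[[Latent], Dict[str, Latent]]


def rollout(context: List[Latent], actions: List[Action], core: Core,
            decode: Decoder, max_context: int = 8) -> List[Dict[str, Latent]]:
    """Autoregressive rollout: each predicted latent is appended to the history,
    becomes context for the next step, and is decoded into sensor outputs."""
    history = list(context)
    outputs = []
    for action in actions:
        next_latent = core(history[-max_context:], action)  # predictive core
        history.append(next_latent)
        outputs.append(decode(next_latent))                 # simulation back end
    return outputs


# Toy placeholders for the learned components (NOT a diffusion transformer).
def toy_core(history: List[Latent], action: Action, dt: float = 0.1) -> Latent:
    return [z + dt * action.x_vel for z in history[-1]]


def toy_decode(latent: Latent) -> Dict[str, Latent]:
    return {"camera": list(latent), "lidar": list(latent), "depth": list(latent)}


if __name__ == "__main__":
    start = fuse({"camera": [1.0, 2.0], "lidar": [3.0, 4.0], "imu": [2.0, 3.0]})
    frames = rollout([start], [Action(x_vel=0.5)] * 3, toy_core, toy_decode)
    print(frames[-1]["lidar"])
```

Because every predicted latent is fed back in as context, any error made by `core` is carried into all later steps; this feedback loop is what makes long-horizon stability a design concern for generative simulators.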

What Makes Maya a Leap Forward?

We are standing on the shoulders of giants, but Maya's design incorporates several key innovations to tackle the specific needs of robotics:

- Beyond Simplified Physics: We are not learning to simulate environments with simplified, predefined rules. By training on vast logs of real-world robotic data, Maya will learn the messy, nuanced physics of reality.

- True Multimodal Understanding: Fusing multiple sensor types is a core design principle. This will allow Maya to build a far richer, more robust, and geometrically accurate model of the world than is possible with video alone.

- Embodied, Continuous Control: Robots don't operate with discrete keyboard commands. Maya will be conditioned on the continuous control signals that drive real robots, allowing it to function as a true "digital twin" at a behavioral level.

- Engineered for Stability: A known challenge for generative simulators is "drift," where small prediction errors compound over time because each generated frame becomes the input for the next, eventually causing the world to become unstable. We are integrating cutting-edge techniques, such as context corruption during training, to ensure our generated worlds remain coherent and physically plausible over long horizons.
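The post does not specify how Maya implements context corruption. One common form in diffusion-based world models is noise augmentation: during training, the context frames the model conditions on are perturbed with Gaussian noise of a randomly sampled strength, so the model learns to cope with imperfect histories like the ones it will produce itself at inference time. The minimal sketch below assumes that form; `corrupt_context`, `training_example`, the list-of-floats latent representation, and the scalar action are all hypothetical choices made for illustration.

```python
import random
from typing import Dict, List, Tuple

Latent = List[float]  # toy stand-in for an encoded sensor frame


def corrupt_context(context: List[Latent], max_sigma: float,
                    rng: random.Random) -> Tuple[List[Latent], float]:
    """Add Gaussian noise with a randomly sampled std-dev to every context latent.
    Returns new lists plus the sampled noise level; the input is left untouched."""
    sigma = rng.uniform(0.0, max_sigma)
    noisy = [[z + rng.gauss(0.0, sigma) for z in frame] for frame in context]
    return noisy, sigma


def training_example(frames: List[Latent], actions: List[float], t: int,
                     context_len: int, max_sigma: float,
                     rng: random.Random) -> Dict[str, object]:
    """Build one next-frame training example with a corrupted context.
    Only the context is noised; the prediction target frames[t] stays clean."""
    if t < context_len:
        raise ValueError("need at least context_len frames before index t")
    noisy_context, sigma = corrupt_context(frames[t - context_len:t], max_sigma, rng)
    return {
        "context": noisy_context,
        "noise_level": sigma,      # often passed to the model as extra conditioning
        "action": actions[t - 1],  # command applied between frame t-1 and frame t
        "target": frames[t],
    }
```

Sampling a fresh noise level per example exposes the model to a range of corruption strengths, and returning that level lets the model be told how much to trust its history; at inference time a small fixed level can then be applied to self-generated context.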

The Road Ahead

Building Maya is a monumental undertaking: a long-term research initiative that will require tackling immense engineering and scientific challenges. But the destination is worth the journey.

We envision a future where roboticists can train agents in an infinite curriculum of novel, diverse, and challenging worlds, generated in an instant. A future where the sim-to-real gap is finally closed, allowing us to deploy more capable and reliable robots safely into the real world.

Maya is more than a project. It is our commitment to building the foundational tools that will unlock the next wave of progress in artificial intelligence.

Join us.
